|

Working with Sebastian Risi, we tried to advance our understanding of how to combine the strengths of human intuition and machine intelligence.


Published article:

Interactive Evolution of Complex Behaviours through Skill Encapsulation. González de Prado Salas P, and Risi S. Proceedings of the 20th European Conference on the Applications of Evolutionary Computation (EvoApplications 2017).

Machine learning has become popular thanks to impressive image-recognition algorithms, self-driving cars and programs that beat humans at difficult games, most notably chess and, very recently, Go (AlphaGo).

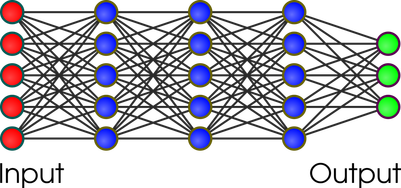

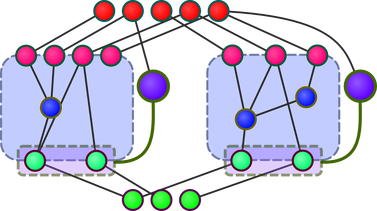

A popular approach is the use of deep networks. These networks have layers of neurons between input and output, and are well suited to processing complex input, as in image recognition. There, each layer can produce a more and more complex interpretation of the input. For example, the first layer might be able to find horizontal or vertical elements. The second may use this information to find circles, and so on, until the network can classify input images into categories such as "cats" or "trees". Here is a great website for visualizing deep learning!

Deep network

Deep networks have a daunting number of nodes and connections (say millions), so how do we know the strength needed in each connection for the whole thing to work? The answer is training. We feed the system a huge number of known cases (images of trees and cats) and we use each known correct answer to tweak the connections so the system slowly improves.
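To make the idea of training concrete, here is a minimal sketch that uses a single artificial neuron instead of a deep network. The toy data set (logical OR), the function names and the learning rate are all assumptions chosen for illustration; real image classifiers use far larger networks and dedicated libraries.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def predict(weights, bias, inputs):
    """Output of one sigmoid neuron for the given inputs."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)

def train_neuron(examples, epochs=2000, learning_rate=0.5, seed=0):
    """Adjust the connections of one neuron using examples with known answers."""
    rng = random.Random(seed)
    weights = [rng.uniform(-0.5, 0.5) for _ in examples[0][0]]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            # How far is the current answer from the known correct answer?
            error = predict(weights, bias, inputs) - target
            # Nudge each connection slightly in the direction that reduces the error.
            for i, x in enumerate(inputs):
                weights[i] -= learning_rate * error * x
            bias -= learning_rate * error
    return weights, bias

# Toy "known cases": inputs paired with the correct answer (logical OR).
OR_EXAMPLES = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
weights, bias = train_neuron(OR_EXAMPLES)

for inputs, target in OR_EXAMPLES:
    print(inputs, target, round(predict(weights, bias, inputs), 3))
```

Each pass makes only a small correction, which is why many examples and many repetitions are needed before the answers become reliable.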

The limitations of this approach are obvious: it is very costly (it requires lots of hardware and time), it uses enormous networks (when much smaller networks can do amazing stuff) and the resulting networks are not easy to adapt, meaning that if you want to use your chess champion to play a chess variation, you will have to redo most of the work. In addition, we do not always have a good repository of examples with a known answer, and the problem may even be hard to describe in such terms.

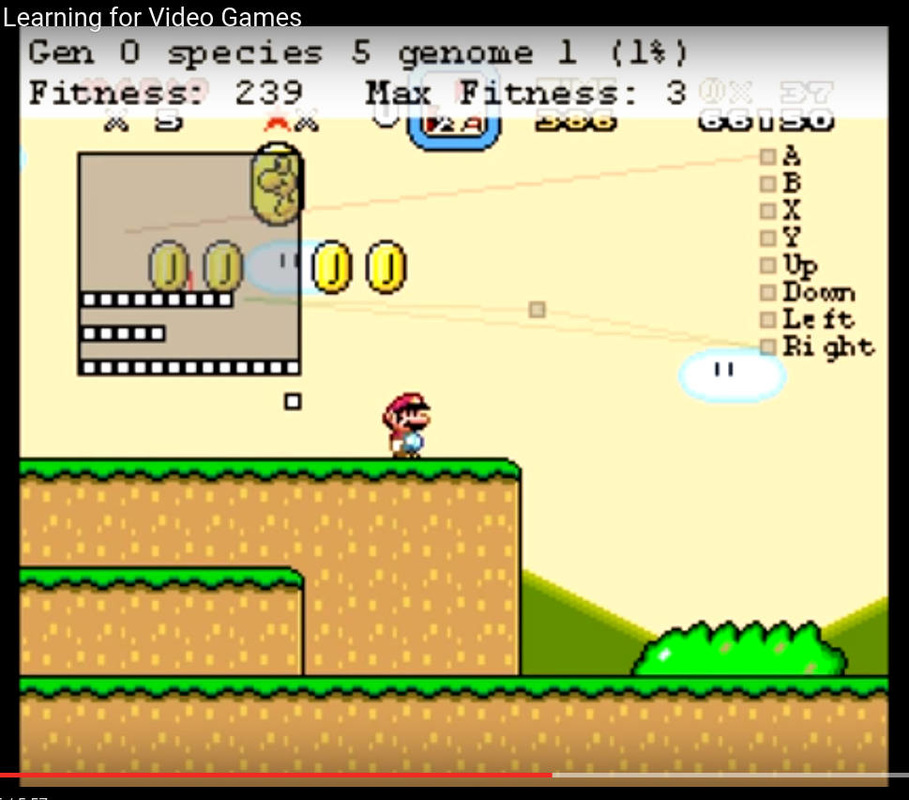

Another approach is neuroevolution. Neuroevolution takes inspiration, once again, from nature. The basic idea is to create many different networks (perhaps purely at random at the beginning) and then test them. Those that are more interesting are selected to produce offspring, which include mutations. This process is iterated until a satisfactory result is found.
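The loop described above can be sketched in a few lines. This is only an illustration under stated assumptions: the tiny fixed network layout, the XOR task used as a stand-in problem, the mutation strength and the population sizes are all hypothetical choices, not the setup used in the published article.

```python
import math
import random

def make_network(rng, n_weights=9):
    """A network is just a list of connection strengths, random at first."""
    return [rng.uniform(-1, 1) for _ in range(n_weights)]

def activate(net, a, b):
    """Tiny fixed layout: 2 inputs -> 2 hidden neurons -> 1 output."""
    h1 = math.tanh(net[0] * a + net[1] * b + net[2])
    h2 = math.tanh(net[3] * a + net[4] * b + net[5])
    return math.tanh(net[6] * h1 + net[7] * h2 + net[8])

XOR_CASES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

def fitness(net):
    """Higher is better: negative squared error over the test cases."""
    return -sum((activate(net, a, b) - t) ** 2 for (a, b), t in XOR_CASES)

def mutate(net, rng, sigma=0.3):
    """Offspring are copies of a parent with small random changes."""
    return [w + rng.gauss(0, sigma) for w in net]

def evolve(generations=50, population_size=30, n_parents=5, seed=1):
    rng = random.Random(seed)
    population = [make_network(rng) for _ in range(population_size)]
    best_per_generation = []
    for _ in range(generations):
        ranked = sorted(population, key=fitness, reverse=True)  # test everyone
        best_per_generation.append(fitness(ranked[0]))
        parents = ranked[:n_parents]                            # select the best
        offspring = [mutate(rng.choice(parents), rng)
                     for _ in range(population_size - n_parents)]
        population = parents + offspring  # parents survive unchanged
    return max(population, key=fitness), best_per_generation

best, history = evolve()
print("best fitness, first generation:", round(history[0], 3))
print("best fitness, last generation: ", round(history[-1], 3))
```

Because the selected parents are carried over unchanged, the best score can never get worse from one generation to the next; whether it gets good enough depends on the task and the settings.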

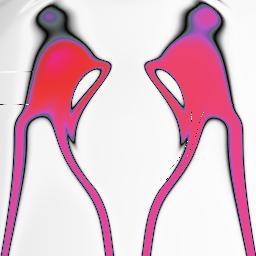

This method has been used for very different tasks, such as playing Super Mario, exploring creativity or evolutionary robotics.

This evolutionary idea seems to promise a final solution: if a similar process (natural evolution) was enough to create real cats, why would neuroevolution not be able to solve simple computational tasks? In practice there are obstacles. Evolution is often guided by mathematical functions, but some problems are very hard to describe in these terms, especially if they are abstract (imagine evaluating art using maths). We are also unable to produce open-ended evolution yet.

In an open-ended situation, given an interesting network, you should be able to continue evolving it to make it even better, adding new features. But trying to teach new tasks to a network usually means forgetting old tasks, as evolution cannot know which elements are responsible for which behaviour.

To be precise, the term forgetting is usually reserved for learning, where the neural network is modified during the lifetime of the agent, as opposed to evolution, where networks evolve through generations of individuals. However, the problem remains: trying to evolve new features in a neural network degrades previous abilities.

We are interested in using the powerful human intuition to improve the design process. A similar idea was used in the successful research game Foldit. However, while humans seem very good at developing an intuition for protein folding, understanding the structure of even small neural networks has proved challenging (try BrainCrafter). Is it possible then to make better use of the powerful design abilities of humans?

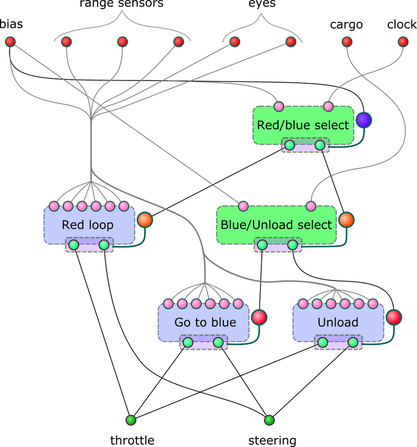

Our work builds on the ESP model. The basic idea is to structure the neural networks, creating modules that encapsulate tasks and protect sections of the network. Modules include a special type of neuron that will control when the module is going to be active.
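As a rough illustration of this structure, the sketch below represents each module as a behaviour plus a gate that decides when the module is active. Everything here is an assumption made for the example: the `Module` class, the `frozen` flag, the sensor names and the hand-written functions (which stand in for evolved sub-networks and for the special control neuron) are hypothetical and are not taken from the ESP model or from our implementation.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Sensors = Dict[str, float]

@dataclass
class Module:
    name: str
    behaviour: Callable[[Sensors], float]  # stand-in for the module's evolved sub-network
    regulator: Callable[[Sensors], float]  # stand-in for the neuron that switches the module on
    frozen: bool = False                   # protected modules are left alone by further evolution

    def output(self, sensors: Sensors) -> float:
        # The regulator gates the behaviour: 0 silences the module, 1 lets it through.
        return self.regulator(sensors) * self.behaviour(sensors)

def controller_output(modules: List[Module], sensors: Sensors) -> float:
    """Combine the gated outputs of all modules into one steering command."""
    return sum(m.output(sensors) for m in modules)

def evolvable(modules: List[Module]) -> List[Module]:
    """Only unprotected modules may be changed when a new skill is evolved."""
    return [m for m in modules if not m.frozen]

near_wall = lambda s: 1.0 if s["wall_distance"] < 1.0 else 0.0

avoid_wall = Module("avoid_wall", behaviour=lambda s: -1.0,
                    regulator=near_wall, frozen=True)
seek_food = Module("seek_food", behaviour=lambda s: s["food_direction"],
                   regulator=lambda s: 1.0 - near_wall(s))

modules = [avoid_wall, seek_food]
print(controller_output(modules, {"wall_distance": 0.5, "food_direction": 0.8}))
print(controller_output(modules, {"wall_distance": 5.0, "food_direction": 0.8}))
print([m.name for m in evolvable(modules)])
```

The point of the sketch is the separation: an already working skill can be protected while a new module is added and evolved next to it.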

By protecting simple tasks, this method allows very complex behaviours to be developed. The main problem is that fitness-based evolution is not well suited for this approach. Fitness-based evolution uses a mathematical function to return a quality value for each individual in the population. If we break down our problem into many modules, each module will need its own fitness function.

To make things worse, complex behaviours are difficult to analyse in simple mathematical terms, so creating a fitness function may sometimes be as challenging as the evolutionary problem itself. In addition, not all fitness functions that are consistent with the problem will result in successful evolution!

For these reasons we are combining the modular approach with interactive evolution, in which users get to evaluate the performance of different individuals. Note how well this goes with open-ended evolution, where new goals may be defined as evolution progresses. Interactive evolution is also appropriate for artistic research and other fields that are highly abstract.
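The difference from fitness-based evolution can be sketched as follows: the step that ranks individuals with a mathematical function is replaced by a callback that asks the user which individuals to keep. This is a simplified sketch under assumptions. The function names, the genome representation and the `scripted_user` stand-in (used so the example runs without a graphical interface) are hypothetical.

```python
import random
from typing import Callable, List, Sequence

Genome = List[float]

def interactive_generation(
    population: Sequence[Genome],
    choose: Callable[[Sequence[Genome]], List[int]],
    rng: random.Random,
    sigma: float = 0.2,
) -> List[Genome]:
    """One generation in which a user, not a fitness function, picks the parents."""
    selected = choose(population)  # indices of the individuals the user liked
    if not selected:
        raise ValueError("the user must select at least one individual")
    parents = [population[i] for i in selected]
    offspring: List[Genome] = []
    # Refill the population with mutated copies of the chosen individuals.
    while len(parents) + len(offspring) < len(population):
        parent = rng.choice(parents)
        offspring.append([gene + rng.gauss(0, sigma) for gene in parent])
    return parents + offspring

def scripted_user(population: Sequence[Genome]) -> List[int]:
    """Stand-in for a person watching the behaviours and clicking on two of them."""
    ranked = sorted(range(len(population)),
                    key=lambda i: population[i][0], reverse=True)
    return ranked[:2]

rng = random.Random(42)
population = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(8)]
next_population = interactive_generation(population, scripted_user, rng)
print(len(next_population), "individuals in the next generation")
```

In a real application `choose` would display each individual's behaviour and return the user's picks, so the goal can change from one generation to the next without rewriting any evaluation function.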

The main idea is to combine human abilities and algorithms to get the best of both worlds. In our case, algorithms are very powerful for exploration, so they are used in the evolution of the neural networks within the modules. Humans are great at handling abstraction, which is more challenging for computers. For this reason human users get to decide the modular structure of the neural network and the relationship between modules. Humans are also needed to evaluate and guide the evolutionary process.

Results are very promising. In our trials, our model performed better than both fitness-based evolution and traditional interactive evolution, but there is still a lot of work ahead!

|